starting to think about Kelly

Continuing from here:

http://www.trade2win.com/boards/trading-journals/140032-my-journal-3-a-61.html#post1797886

Now that, with my ratio and with my Monte Carlo VaR estimator (the "blender"), I've got the portfolio theory for the present all figured out, I need to start thinking in terms of Kelly Criterion.

I think everyone should think in terms of Kelly, because the chance of losing everything is always there, so you can never invest your whole capital. But then things might change for those investing in bonds or similar.

Anyway, I need Kelly. I see Kelly as something that will increase the chance of survival of my capital. There's always a chance of blowing out, even with Kelly, because:

1) the systems could stop working

2) you could get very unlucky and have a huge drawdown

3) the systems could be or become correlated (related to the previous points)

4) most importantly: Kelly assumes infinite divisibility and futures contracts do not allow that (especially with a small capital)

But it takes longer to blow out your account if you use Kelly. And it allows you to grow it faster. This is exactly what we didn't do with the investors. We kept going towards the cliff at the same speed throughout the drawdown, at the end of which they stopped trading. Wrong, if you consider what I said: there's always a chance of blowing out, no matter what: even if you did everything right.

Back then we were instead thinking in terms of historical VaR, and as if "maximum drawdown" meant something: as if you could just double the maximum drawdown and get a value that cannot be exceeded.

The way I'd approach this problem is to simplify everything first of all, because I can't think in any other terms. I can't use terms such as "second-order stochastic dominance" and "mixed linear integer programming problem". Those are terms for people busy showing off.

In plain and simple words, I am going to pretend that my bet is not each trade but each six months.

In a period of six months, or let's call it "in my bet", with the present portfolio, I have (as an average estimate) an 80% chance of quadrupling my capital and a 20% chance of losing everything.

What I mean, without reinvesting: if I start on any day at random, with the present portfolio, and trade for the next six months, my estimate is that I have a 20% chance of blowing out and an 80% chance of quadrupling my account.

Having said this, let's see what Kelly says.

[...]

Damn!

Stumbling block...

[...]

I have either become retarded from too much work and burning out or this is a challenging concept, or both... what exactly does it mean to invest a fraction of capital when you're trading futures?

Ok, wait.

Ok, let's say I am trading X contracts/systems with Y capital.

This combination will cause me, as mentioned, at the end of the bet period, an 80% chance of quadrupling Y capital, and a 20% chance of losing it all.

[...]

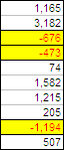

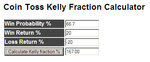

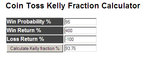

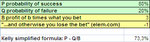

Ok, I should invest 73.33%, both according to my excel formula here:

http://www.trade2win.com/boards/att...401-my-journal-3-kelly_criterion_20120225.xls

And according to this calculator:

A Kelly Strategy Calculator

Results

- The odds are in your favor, but read the following carefully:

- According to the Kelly criterion your optimal bet is about 73.33% of your capital, or $733.00.

- On 80% of similar occasions, you would expect to gain $2,199.00 in addition to your stake of $733.00 being returned.

- But on those occasions when you lose, you will lose your stake of $733.00.

- Your fortune will grow, on average, by about 66.62% on each bet.

- Bets have been rounded down to the nearest multiple of $1.00.

- If you do not bet exactly $733.00, you should bet less than $733.00.

- The outcome of this bet is assumed to have no relationship to any other bet you make.

- The Kelly criterion is maximally aggressive — it seeks to increase capital at the maximum rate possible. Professional gamblers typically take a less aggressive approach, and generally won't bet more than about 2.5% of their bankroll on any wager. In this case that would be $25.00.

- A common strategy (see discussion below) is to wager half the Kelly amount, which in this case would be $366.00.

- If your estimated probability of 80% is too high, you will bet too much and lose over time. Make sure you are using a conservative (low) estimate.

- Please read the disclaimer.

But this is assuming that futures contracts are infinitely divisible. And they're not.
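To check the 73.33% by hand, here is a minimal sketch of the standard Kelly formula for a win/lose bet, f* = p - (1 - p) / b. The function names are mine, and I am assuming that "quadrupling" means net odds of 3 to 1 (you get your stake back plus 3 times the stake), which is what the calculator output above implies.

```python
import math

def kelly_fraction(p_win: float, net_odds: float) -> float:
    """Kelly fraction for a binary bet: f* = p - (1 - p) / b."""
    return p_win - (1.0 - p_win) / net_odds

def log_growth(fraction: float, p_win: float, net_odds: float) -> float:
    """Expected log growth per bet when staking `fraction` of capital."""
    win_term = p_win * math.log(1.0 + net_odds * fraction)
    lose_term = (1.0 - p_win) * math.log(1.0 - fraction)
    return win_term + lose_term

p = 0.80  # my estimated chance of quadrupling over six months
b = 3.0   # assumption: quadrupling = winning 3x the stake on top of the stake

f_star = kelly_fraction(p, b)
print(f"Kelly fraction: {f_star:.4f}")
print(f"Log growth at full Kelly: {log_growth(f_star, p, b):.4f}")
print(f"Log growth at half Kelly: {log_growth(f_star / 2, p, b):.4f}")
```

The log growth at full Kelly comes out at about 0.666, which seems to be where the calculator's "66.62%" comes from. Everything here still assumes the bet can be sized to any fraction.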

This is getting very complex, but not so complex that I can't figure it out. Maybe I'll need the help of the bath tub or the help of some dreaming. I'll take a little break for now, because I have to work for the office.

[...]

Back from working for the office.

I was thinking and having problems with this... let's try to remember.

Ok, assuming infinite divisibility of my capital and of futures contracts, and with these parameters:

1) trading 13 systems/contracts

2) with 10k

3) 80% chance of quadrupling at the end of period

4) 20% chance of losing everything

Kelly says I should invest 73.33%.

But if I invest 73.33%, the contracts do not shrink, so basically what Kelly is saying is that I should shrink the contracts to 73% of what I can afford with 10k. No, 73% of the combination I am examining, of 13 systems/contracts.

So, he's saying that with 10k, to maximize my return (and avoid the risk of blowing out) I should invest in 73% of the mentioned portfolio.

But mentioned portfolio is 13 contracts for 13 systems, and it cannot be reduced in any way:

1) I cannot get rid of some systems

2) I cannot reduce the size of the futures traded

So where do I go from here?

I think where I go is this: I am taking, as I thought and said from the start, in early January, a 20% chance of blowing out.

But we're thinking this thing for the future and not for now.

For now this is what happens: as capital increases, the % VaR decreases, and so does my chance of blowing out. The more the capital, the more I can take the variability of this portfolio.

Kelly will apply when I'll have much more and I'll want to scale up, and it will answer these questions:

1) when do I scale up?

2) when do I scale down?

Let's simplify a bit further. Let's say that Kelly says I should invest, for every 10k, not 73% but 50% of the mentioned portfolio. Similar rationale to the "half-Kelly" concept.

Since the mentioned portfolio cannot be divided, capital has to be doubled. This means that when I will have 20k, I will be correctly investing in the present portfolio.
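The arithmetic behind that doubling, as a rough sketch: if the portfolio is sized for 10k and cannot be shrunk, the only way to hold a given fraction of it is to have more capital behind it. The function name is hypothetical, just for illustration.

```python
def capital_per_unit(base_capital: float, fraction: float) -> float:
    """Capital needed so that one indivisible portfolio, sized for
    `base_capital`, represents only `fraction` of the account."""
    return base_capital / fraction

base = 10_000  # what portfolio 13 is sized for today

print(capital_per_unit(base, 0.50))           # the simplified 50% case
print(round(capital_per_unit(base, 0.7333)))  # the full Kelly 73.33% case
```

With 50% this gives the 20k used in the rest of the reasoning. With the full 73.33% it would be a bit under 14k, so 20k per portfolio is the more conservative of the two.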

But it also means that, should I lose let's say 1k, then I should immediately scale down, but this cannot be done, so Kelly cannot even be applied for when I'll have 20k.

So let's start reasoning as if I had 40k. And let's call the present portfolio/leverage "portfolio 13" (it trades 13 systems and 13 contracts). And let's assume this portfolio 13 cannot be touched because it is an efficient frontier portfolio and it maximizes expected profit vs variability (or whatever you wanna call it).

When I'll have 40k of capital, since I need 20k for "portfolio 13", I will then be able to trade "portfolio 26", which is like portfolio 13 multiplied by 2.

Then, the minute my capital goes from 40k to 39k, I will have to scale down again to portfolio 13.

So ok, I think I pretty much answered my own question without the need to go in the bath tub.

Let's go over it again and explain it to myself better.

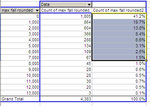

For every 20k I have, I can trade a portfolio 13, which means that as soon as I have 40k I can trade two of them, and... when I'll have 100k, I should be trading portfolio 13x5, which is "portfolio 65". Then, as soon as I lose 1k and all the way down to a capital of 80k, I'll have to switch to portfolio 13x4.

It's like Excel's TRUNC() function. As soon as you have 1.9, it's as if you had 1. As soon as I have 39k, it's as if I had 20k, the capital for portfolio 13.
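The truncation rule can be sketched in a few lines. The names are made up for the example; the 20k per unit and the 13 contracts per unit are the figures from the reasoning above.

```python
def portfolio_units(capital: float, capital_per_unit: float = 20_000) -> int:
    """Whole portfolio-13 units the capital supports (truncated, never rounded up)."""
    return int(capital // capital_per_unit)

def contracts(capital: float, contracts_per_unit: int = 13) -> int:
    """Total contracts to trade at this capital level."""
    return portfolio_units(capital) * contracts_per_unit

for capital in (39_000, 40_000, 80_000, 99_000, 100_000):
    print(capital, "-> portfolio", contracts(capital))
```

Note that, taken literally, the rule gives zero units below 20k, which is exactly why it cannot be applied yet at the present capital.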

Ok, it's all clear. For now I don't have any more insights. What is clear is that I'll have to either wait until I reach 40k to apply this approach and scale up, or I should see if I can minimize this, and reduce it to a hypothetical portfolio 1, with 1 system and 1 future, which will allow me to speed up the scaling up and the compounding by dividing it into smaller steps.

The way I should go about it, and I'll keep thinking about this in the next few weeks and months, is to identify a system to add/drop for every level of capital.

Instead of going from portfolio 13 at 20k to portfolio 26 at 40k, I should identify one system to be added/dropped for every 2000 dollars made/lost.

A lot of thinking to do. What is interesting is that with the investors we did not prolong the life of our capital, because (mistakenly) we did not have a Kelly approach but a maximum drawdown approach, which many other traders have. That approach says you should keep investing not a fixed fraction of your capital (like Kelly) but a fixed capital, all the way until your systems have proven to have failed by exceeding the maximum drawdown plus some % of leash allowed. The problems with this are that:

1) max drawdown doesn't exist - it's just a matter of probability. The maximum drawdown is infinite, even though it will have a small probability of happening. And if it happened it still would not prove your systems have failed.

2) Historical VaR is not an appropriate assessment of VaR, because the combination of systems together could have produced a low drawdown just by luck, given the limited length of backtesting.

Anyway, no need to mention more for me to understand that leverage should decrease as capital decreases. Just as it increased as capital increased.
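To see the difference during a losing streak, here is a toy comparison of the two approaches: keeping the same size all the way down versus scaling down with the 20k-per-unit truncation rule. The loss of 2,000 per portfolio unit per period is a purely hypothetical number picked for the illustration, not an estimate of anything.

```python
def units_for(capital: float, capital_per_unit: float = 20_000) -> int:
    """Whole portfolio units the capital supports."""
    return int(capital // capital_per_unit)

def losing_streak(start_capital, periods, loss_per_unit, rescale):
    """Capital path when every period loses `loss_per_unit` per unit traded.

    rescale=False keeps the starting size (fixed capital approach);
    rescale=True recomputes the units from current capital each period.
    """
    capital = start_capital
    start_units = units_for(start_capital)
    path = [capital]
    for _ in range(periods):
        units = units_for(capital) if rescale else start_units
        capital = max(0, capital - units * loss_per_unit)
        path.append(capital)
    return path

# Hypothetical streak: 10 losing periods in a row, 2,000 lost per unit each time.
fixed = losing_streak(100_000, 10, 2_000, rescale=False)
stepped = losing_streak(100_000, 10, 2_000, rescale=True)
print("fixed size:  ", fixed)
print("scaling down:", stepped)
```

In this made-up streak the fixed-size account is wiped out after 10 periods, while the one that scales down still has capital left and is walking towards the cliff more and more slowly.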