eureka: replacing sharpe ratio with profit factor and trades with ROI

Ok, I was just now in the bath tub and as usual I could understand things more than anywhere else.

Improving my (empirical) scatterplot, rather than devising a portfolio formula

I figured out the ingredients and I figured out how to put them together, all of them, in one empirical scatter plot.

Agreed, it's not a magic formula like the sharpe ratio that claims to solve all problems, without doing it, but this is just the clearest scatter plot that I ever came up with. I claim less, but I do it right.

The ingredients that must be included in assessing a system's performance are the following, but with two important constraints:

time and money invested.

Limits of the sharpe ratio

In other words, it doesn't make sense to measure how much money a system makes with a different capital invested than another system, and it doesn't make sense to measure how much money it makes in a different period. Granted? Obvious? Well, guess what: the fabulous sharpe ratio doesn't even see this. If a system makes 2000 and loses 1000, it has the same sharpe ratio as another that makes 200 and loses 100. I see a different return on investment, but sharpe ratio doesn't. And guess what, if (within the same period) a system makes 20 trades in a sequence of +200 and -100, or it makes 2000 such trades, sharpe ratio doesn't see that either (except for using stdev instead of stdevp, which causes a tiny difference).
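The two blind spots just described can be checked with a few lines of Python. This is only a sketch under my own assumptions: the "sharpe ratio" here is the plain per-trade version (mean profit over standard deviation, no risk-free rate, no annualization), and the function name `sharpe` and the trade lists are made up for illustration.

```python
from statistics import mean, pstdev, stdev

def sharpe(trades, population=True):
    """Per-trade Sharpe-style ratio: mean profit / standard deviation.

    Assumption: no risk-free rate and no annualization.
    population=True uses pstdev (stdevp), False uses stdev.
    """
    sd = pstdev(trades) if population else stdev(trades)
    return mean(trades) / sd

small = [200, -100] * 10      # 20 trades of +200 / -100
big = [2000, -1000] * 10      # same pattern with ten times the money
many = [200, -100] * 1000     # 2000 trades of +200 / -100

# Same ratio regardless of trade size or number of trades (stdevp version).
print(sharpe(small), sharpe(big), sharpe(many))

# With the sample stdev the trade count causes only a tiny difference.
print(sharpe(small, population=False), sharpe(many, population=False))
```

The first line prints the same value three times (up to floating-point rounding), and the second shows the small stdev versus stdevp gap shrinking as the number of trades grows.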

So, these two assumptions are a great improvement compared to how I was doing things before, because I was using sharpe ratio, and yet sharpe ratio ignores them, and so basically it is not an effective tool in comparing different systems: yeah, amazing. I thought it was the ultimate tool to compare different systems - well, I was wrong, along with the whole financial community.

Ingredients, after making those two assumptions

So, given these two assumptions of equal time period and equal capital invested, here's the ingredients:

1) money made, profit

2) variability (zigzags, ups and downs)

3) correlation, covariance, what have you

And these are just my ingredients for evaluating a specific system - I am not talking about putting them together, because I am far from capable of doing that on a theoretical level, even though I can do it on an empirical level with resampling and shortfall % calculations.

Correlation set aside for now

These ingredients are not for the portfolio (for which I'll keep using my empirical methods for now) but for appraising and comparing systems with one another. Even so, correlation would still be a good quality to assess, but there's a problem. Correlation (or covariance) is not as univocally measured as the rest of the characteristics I've mentioned. Indeed, if I try to find the correlation between a future and the system that trades it, and the system makes money consistently while the future has been rising consistently (such as gold for the last 10 years, or other futures), then my correlation estimate is not accurate. So, if I cannot do it right, I won't do it. I'll do it separately, manually, but it cannot be part of my scatterplot.

Empirical recipe (scatter plot)

Actually I could even come up with a complete formula, like the sharpe ratio, which measures more than the sharpe ratio, but I won't do it, because I want to make money and not write an academic paper that looks good, and is not understandable to anyone other than the other academics. I want to keep the ingredients separate, because I want to look at them, to be aware of them. So I won't use a formula, but I'll keep the ingredients separate in a scatter plot.

Replacing the sharpe ratio with profit factor

The first thing I will do, after all I've said against the sharpe ratio, is to get rid of it, and replace it with the profit factor. What does the sharpe ratio do? In simple words it divides the average profit by the standard deviation of the profits (forget the rest of the bull**** that makes it look so legitimate and magical). What does profit factor do? Gross profit over gross loss. So the difference is that whereas the sharpe ratio compares money made to deviation (zigzagging, ups and downs), profit factor divides money made by money lost, which is none other than the deviation on the way down, isn't it? So someone tell sortino that he didn't have to invent the sortino ratio but could have gone back to the profit factor. Except profit factor is simpler than sharpe ratio and sortino ratio. So, goodbye academics, for now at least.
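For reference, here is a minimal sketch of the profit factor as described above (gross profit over gross loss). The function name `profit_factor` and the sample trades are hypothetical, and returning infinity for a system with no losing trades is my own convention, not something settled in this post.

```python
def profit_factor(trades):
    """Gross profit divided by the absolute value of gross loss."""
    gross_profit = sum(t for t in trades if t > 0)
    gross_loss = -sum(t for t in trades if t < 0)
    if gross_loss == 0:
        # Assumption: a system with no losing trades gets an infinite profit factor.
        return float("inf")
    return gross_profit / gross_loss

print(profit_factor([200, -100, 200, -100]))      # 400 / 200
print(profit_factor([2000, -1000, 2000, -1000]))  # 4000 / 2000
```

Note that both calls give the same number: profit factor on its own does not see the size of the trades either, which is why the money made per unit of margin has to be measured separately.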

Profit factor on y-axis and ROI on x-axis

So, ok, on the y-axis of my scatter plot I will simply put profit factor, which accounts for the accuracy of my system, which was done by sharpe ratio until now. On the x-axis, instead of having the monthly trades, which might not give me a direct idea of the money a system can make, I will put the monthly profit divided by the margin required.
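As a rough sketch, the two coordinates of one system's point could be computed like this, taking profit factor as the y value and monthly profit over margin as the x value (the way the chart comparison further down reads them). The function name `scatter_point`, the trade list, the number of months and the margin are all made-up values for illustration.

```python
def scatter_point(trades, months, margin):
    """Return (x, y) = (monthly profit / margin, profit factor) for one system."""
    gross_profit = sum(t for t in trades if t > 0)
    gross_loss = -sum(t for t in trades if t < 0)
    # Assumption: no losing trades means an infinite profit factor.
    pf = gross_profit / gross_loss if gross_loss else float("inf")
    monthly_profit = (gross_profit - gross_loss) / months
    roi = monthly_profit / margin
    return roi, pf

# Hypothetical system: 5 trades over 2 months, 5000 of overnight margin.
x, y = scatter_point([300, -100, 250, -150, 200], months=2, margin=5000)
print(f"x (monthly ROI) = {x:.1%}, y (profit factor) = {y:.2f}")
```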

And I am done. It's not a lot, but it's clear. It's the clearest assessment I ever had of my systems.

It's not even accounting for drawdown, but sharpe ratio wasn't either. At least I've added a few things to my scatter plot, and simplified it by getting rid of unclear measurements like the sharpe ratio.

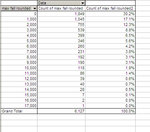

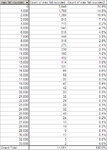

It has one thing missing though: it's the number of trades. I will have to check this manually. Compared to the previous scatter plot I've lost the number of trades:

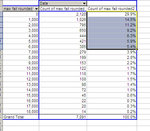

And I've gained Return On Account (monthly profit divided by overnight margin):

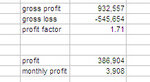

There's a lot more systems because, since I am not familiar with it yet, I will allow lower profit factors than I allowed sharpe ratios (after becoming familiar with it, I only allowed systems with sharpe ratios of 2 or above).

The profitability changed a lot of things, and this seems healthy to me. I am getting about twice as much information as I was getting before. For a few more months, there may be some surprising numbers by those systems that didn't trade very much and have very high profit factors.

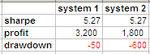

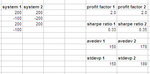

Let's compare those two charts I posted.

On the first one the 3 most accurate and reliable systems (measured on the y-axis by sharpe ratio) are the same as on the second one (measured on the y-axis by profit factor). So far no surprises, and this applies to all systems, because there's a very strong correlation between sharpe ratio and profit factor (due to how close the concepts are).

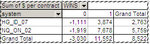

The surprise is obviously that, since I added the concept of Return On Investment (ROI), I now notice, on the x-axis, that NQ_ON_02 is almost 4 times as profitable as the other two (due to the margin requirements).

So, on both charts, the most reliable system is NQ_ON_02. The most profitable is, on the second chart, NG_ID_04, which also showed correctly on the first chart. The first chart shows trades per month only because I changed it today; the previous version looked like this:

It only showed the absolute number of trades, which told me how much the system had been forward-tested.

I am quite satisfied with the present scatter plot. Also, I am quite proud that, despite what everyone says about the sharpe ratio, I just didn't give a **** and went back to the profit factor, which no one ever mentions but that overall is better than sharpe ratio. I am proud of being a free thinker. Almost everyone else would have assumed that, because everyone uses sharpe ratio, it must be the best choice. Despite my insecurity about math, formulas... I still went for what seemed best to me. So, once again, **** you all.

[...]

As I said, this is not a formula that takes care of everything and tells you the overall quality of a system (which sharpe ratio does not do, despite what the financial community seems to think). This is just an empirical chart for me to have an overview of my systems. Having made this premise, can I tell which is my best system by looking at the chart?

NQ_ON_02 and ZN_ON_02 would seem similar in terms of return, but NQ is much more accurate. However, I like ZN better, because it's traded for months and months.

So I guess I am missing the quantity of trades. Can I multiply it? No, nothing can be automated here, because it would not be fair to some of the systems. Just the fact that they were created 3 years ago cannot corrupt their ranking. It should be taken into account, but it cannot be incorporated into the existing rankings.

Ok. Hey, I've done more than enough for today.

[...]

One last thing. I checked the systems traded now, and I noticed that of course they also show on the new scatter plot (I didn't circle them all, but most of them - they're too crowded in there to be recognized):

But this new chart brought to the surface 3 systems that are not being traded - with purple squares around them.

I am not trading the CAD one yet, because it hasn't traded enough. This doesn't show on the new chart, but it showed on the chart that I used to have, and that is why the system never stood out there. The same applies to the NQ one, which also has problems of margin due to the duration of the trade. But for both, I will add them if I have a little more capital at the next scaling up.

The NG system instead has a lower accuracy and a greater leverage and so I didn't add it, because I could not afford its drawdown, maybe. Or maybe for some reason I don't remember. Maybe it was too correlated to the other NG systems. Oh, wait, I know. It had a huge drawdown, and the drawdown doesn't show in either the sharpe ratio or the profit factor (the sequence of trades does not matter to either). The drawdown could be a random thing, and is influenced by the duration of trading. So it should be considered but it cannot be included. Otherwise the systems that didn't trade for long or that got lucky would have an unfair advantage in the ranking.

For sure, whatever looks good on the new scatter plot will be appraised empirically the next time I scale up.

[...]

Ok, I am back, willing to work some more.

But before doing the empirical work, is there an equivalent of the sharpe ratio? You know, because they all say "maximize the portfolio sharpe ratio and you're set", which is not true, but I was wondering if I could assess the whole portfolio (from the individual trades or performance metrics) in a way that could anticipate the shortfall calculations I do empirically. If this were possible, then I could save time in the selection process, by first finding the systems in a theoretical way, and then double-checking empirically, but only once I find the best systems.

The first assumption I will make is that we expect no correlation and the order of trades to be random: indeed usually the resampling is worse than the backtesting, so this means random is worse. Maybe this is because I discard those systems that don't fit well together (so in some way I may even be overoptimizing the portfolio).

So, this first assumption makes me set aside once again the ingredient of "correlation" between the systems among each other, or the systems relative to the underlying future they trade.

So all I am left with is finding a method to appraise the portfolio, before the random sampling.

They say that the portfolio with the highest sharpe ratio is the best. Since I threw it out the window and replaced it with the profit factor, now and in the next few days I will have to assess how the profit factor changes as we add systems.

I can start immediately, even though I won't be able to finish this study here.

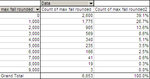

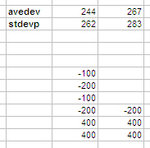

My 120 systems have an average profit factor of 10.3, because there's one with a profit factor of 1000 (it has only a few trades).

So, I am expecting the combined profit factor to be much lower, because I'll be adding up gross profit and dividing it by the absolute value of gross loss.

And indeed it is: it is 1.08.
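The gap between the average of the individual profit factors and the combined one can be reproduced with a toy example. The three systems and their gross profit and loss figures below are invented, not taken from my 120 systems; the only point is that one system with a handful of trades and a huge profit factor inflates the plain average but barely moves the combined figure.

```python
from statistics import mean

# Hypothetical (gross profit, gross loss) per system; gross loss as a positive number.
systems = {
    "few_trades_lucky": (1000.0, 1.0),      # profit factor of 1000 on tiny volume
    "big_system_a": (52000.0, 50000.0),
    "big_system_b": (41000.0, 40000.0),
}

individual = {name: gp / gl for name, (gp, gl) in systems.items()}
average_pf = mean(list(individual.values()))

total_profit = sum(gp for gp, _ in systems.values())
total_loss = sum(gl for _, gl in systems.values())
combined_pf = total_profit / total_loss

print(f"average of individual profit factors: {average_pf:.2f}")
print(f"combined profit factor: {combined_pf:.2f}")
```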

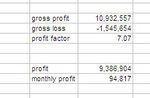

Now let's analyze the two best systems or so. And see how profit factor changes if I sum them.

According to profit factor the two best systems are undoubtedly HG_ID_07 with 4 and NQ_ON_02 with 3.5. We can't expect the combined profit factor to be 3.75, because what is weighed is the profit. Whoever made the most profit will affect the combined profit factor the most. This is different from the sharpe ratio, where it doesn't matter if the bigger system is also the better one, because its standard deviation penalizes the sharpe ratio more than its profit helps it. Here, if the bigger system is better, the combined profit factor will get closer to its individual value.

Very interesting. I was expecting HG to be the bigger one, because of its big margin and leverage. Instead it's NQ.

So I am going to divide the total gross profit by the total gross loss and I get 3.81, so this means that since gross profit and gross loss were bigger for NQ, it has been weighed more heavily.
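The weighting can be written out explicitly. The symbols are my own notation, not anything from the charts: GP is gross profit, GL is the absolute value of gross loss, and PF = GP / GL for each system. Strictly speaking, the weights that come out of the algebra are each system's share of the total gross loss, which for a given profit factor grows together with its gross profit.

```latex
PF_{\text{combined}}
  = \frac{GP_1 + GP_2}{GL_1 + GL_2}
  = \frac{GL_1}{GL_1 + GL_2}\, PF_1 + \frac{GL_2}{GL_1 + GL_2}\, PF_2
```

Since the two weights are positive and add up to 1, the combined profit factor always lands between the two individual values, closer to that of the system with the bigger gross figures.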

I believe that, unlike for the sharpe ratio, if I maximize the combined (portfolio) profit factor, I will also minimize the shortfall.

But wait, maybe I am wrong.

If I add two systems and one has a huge leverage... no what matters is the size of the trades, not even the gross profit/loss of each system: what matters is the size of its trades. The smaller the better.

If trades are random, as I am assuming, and the drawdown is to be ignored (cf. assumption a few lines above), then I have to both maximize profit factor and minimize the size of trades at the same time.
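To see why trade size matters once the order of trades is random, here is a small resampling sketch. It is not my actual shortfall procedure, just an illustration under stated assumptions: trades are drawn with replacement, "blowing out" means equity touching zero or below, and the function name `blowout_probability`, the starting capital and the two trade lists are hypothetical. Both systems have the same profit factor and the same total profit over the period; only the size of the trades differs.

```python
import random

def blowout_probability(trades, capital, n_paths=2000, seed=0):
    """Share of resampled trade sequences whose equity ever falls to zero or below.

    Assumptions: trades are resampled with replacement, each path has as many
    trades as the original list, and the seed is fixed for repeatability.
    """
    rng = random.Random(seed)
    blown = 0
    for _ in range(n_paths):
        equity = capital
        for _ in range(len(trades)):
            equity += rng.choice(trades)
            if equity <= 0:
                blown += 1
                break
    return blown / n_paths

small_trades = [200, -100] * 50    # 100 trades, total profit 5000, profit factor 2
large_trades = [2000, -1000] * 5   # 10 trades, total profit 5000, profit factor 2

p_small = blowout_probability(small_trades, capital=3000)
p_large = blowout_probability(large_trades, capital=3000)
print(f"blow-out probability, small trades: {p_small:.1%}")
print(f"blow-out probability, large trades: {p_large:.1%}")
```

With the large trades three losses in a row at the start are enough to wipe out the hypothetical 3000 of capital, while the small trades would need a far longer losing streak.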

In this sense the ROI metric may even be useless or detrimental, because a lot of profit, all other things being equal, may mean bigger losses, and bigger losses mean bigger shortfalls (or whatever it's called). But this is only "all other things being equal", because a system could also achieve a lot of profit (ROI meaning) with many small trades, so ROI is indeed a quality.

Ideally, I would want a bunch of systems with high PF, high ROI, many trades and therefore...

Maybe I should re-enable the number of monthly trades, because that's more indicative... no I won't. I'd probably end up putting it all back as it is now.

Damn.

There's so many variables at play. We're talking about 3 or 4 variables multiplied by a bunch of systems.

Empirically I have no doubts: the way to go is shortfall minimization, measured as the % probability of blowing out.

In a theoretical way instead, I am far from figuring out the recipe. And now I'll try to take a break for the rest of the day. This is a challenge that I don't want to quit, so my mind keeps coming back to it.