Krzysiaczek99

Well-known member

- Messages

- 430

- Likes

- 1

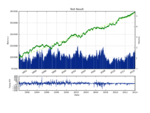

I am thinking of doing the backtesting in Chaos Hunter. The problem with Chaos Hunter is that I can only create buy/sell signals, and the CH fitness function is "maximize the equity". One can maximize the equity while taking large drawdowns, and I don't like large drawdowns. I prefer signals with the smallest MAE (maximum adverse excursion) percent.

In MC you can write a custom fitness function in JavaScript, so it is much better.
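To show what I mean by a fitness function that does not reward large drawdowns, here is a rough sketch in Python (not the actual MC JavaScript, and not any platform's API). The names `max_drawdown` and `fitness` and the sample equity curves are made up for illustration; the idea is simply net profit divided by the worst peak-to-trough drop.

```python
def max_drawdown(equity):
    """Largest peak-to-trough drop of an equity curve, in currency units."""
    peak = equity[0]
    worst = 0.0
    for value in equity:
        peak = max(peak, value)           # highest equity seen so far
        worst = max(worst, peak - value)  # deepest drop below that peak
    return worst


def fitness(equity):
    """Net profit divided by max drawdown (higher is better).

    Hypothetical fitness for illustration: it penalizes curves that
    reach their final equity through deep drawdowns.
    """
    net_profit = equity[-1] - equity[0]
    dd = max_drawdown(equity)
    if dd == 0:
        # No drawdown at all: treat any profit as the best possible score.
        return float("inf") if net_profit > 0 else 0.0
    return net_profit / dd


# Made-up equity curves: "rough" ends higher but swings much more.
smooth = [100, 110, 105, 115, 120]
rough = [100, 140, 90, 150, 125]

print(fitness(smooth), fitness(rough))
```

With "maximize the equity" the rough curve wins because it ends higher; with this ratio the smooth curve scores better.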

On top of that, it uses EasyLanguage, for which a lot of ready-made code is already available.

Some years ago I switched from MT4, then NS, then TradeStation, to MC, and so far it is the best backtesting tool for me. It also has a built-in walk-forward tester, so with just a few clicks you can get proper OOS results.
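For anyone not familiar with walk-forward testing, the idea can be sketched like this in Python. This is a generic rolling scheme, not how MC implements it internally; the function name `walk_forward_windows` and the bar counts are assumptions for illustration. Each window optimizes on an in-sample block and then tests on the out-of-sample (OOS) block that follows it.

```python
def walk_forward_windows(n_bars, in_sample, out_sample):
    """Return rolling (in-sample, out-of-sample) bar index ranges.

    Each range is a (start, end) pair with end exclusive. The window
    steps forward by the OOS length, so the OOS blocks do not overlap.
    """
    windows = []
    start = 0
    while start + in_sample + out_sample <= n_bars:
        is_range = (start, start + in_sample)
        oos_range = (start + in_sample, start + in_sample + out_sample)
        windows.append((is_range, oos_range))
        start += out_sample
    return windows


# Hypothetical sizes: 1000 bars, optimize on 500, test on the next 100.
for is_range, oos_range in walk_forward_windows(1000, 500, 100):
    print("optimize on", is_range, "then test on", oos_range)
```

Stitching the OOS blocks together gives results that the optimizer never saw.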

Krzysztof